Ending Vendor Lock-in with a Unified AI API Platform

Delivering seamless multi-model AI access with a single endpoint, no-code portal, and playground.

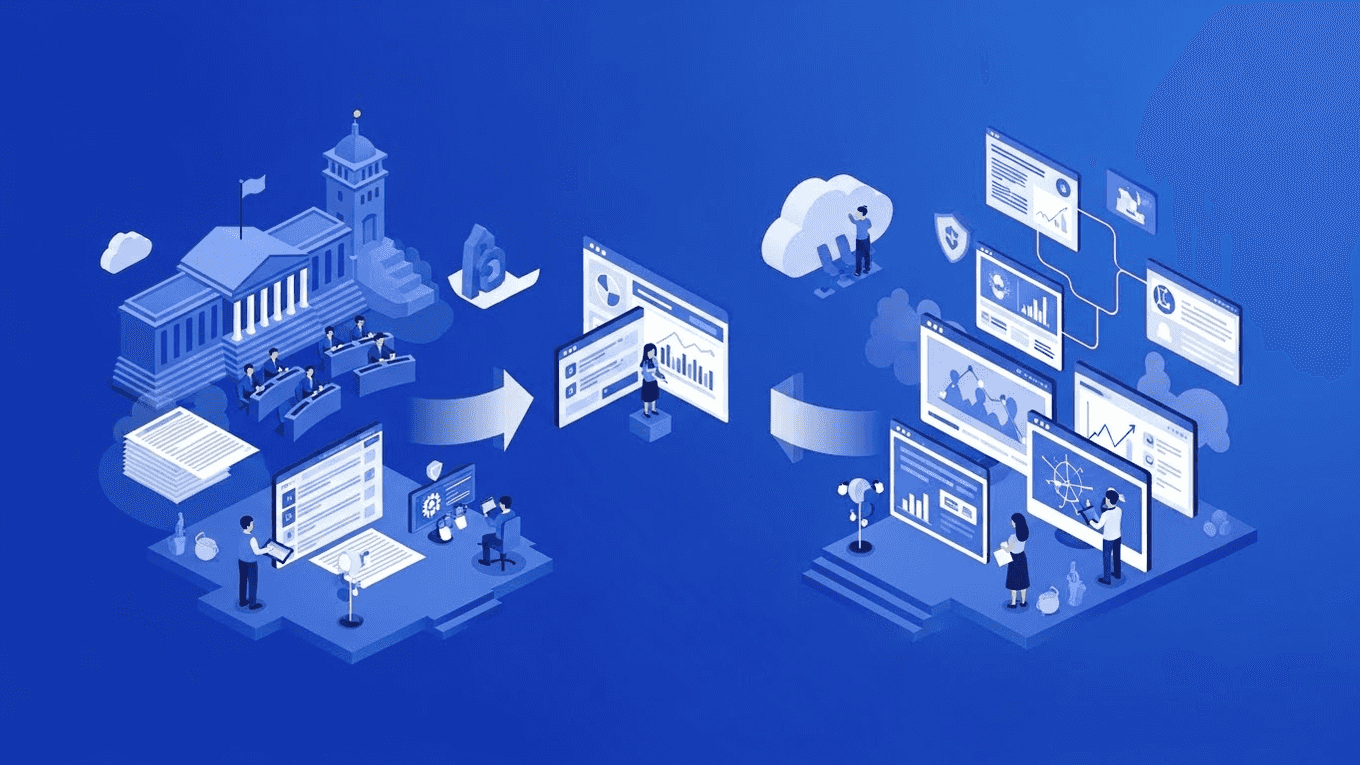

Breaking Barriers to AI Adoption

Enterprises faced mounting friction while adopting multi-model AI:

- Every AI model had its own API, schema, and authentication method.
- Developers spent significant time refactoring code when switching models.
- Business users lacked easy tools to test AI capabilities without engineering support.
- Sensitive workloads required strict data sovereignty and compliance.
- High-throughput production deployments demanded high reliability.

These barriers slowed development cycles and limited AI experimentation, especially in large enterprises. The client needed a unified developer experience, a no-code interface, and enterprise-grade sovereignty.

Impact: High (adoption bottlenecks)

Solution Approach

Edstem partnered with the client to implement a unified API platform, a no-code portal, and a multi-modal playground:

- A unified API layer exposing multiple LLMs and other AI models through a single endpoint
- A frontend portal that lets non-technical users interact with AI models directly
- A multi-modal playground for experimentation and model evaluation
- A sovereign, high-performance architecture with compliance-grade data handling

Key design decisions prioritized simplicity, flexibility, scalability, and enterprise-grade reliability.
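The adapter idea behind a unified API layer can be sketched as follows. This is a minimal illustration, not Edstem's implementation: the `UnifiedRequest` fields, the two provider payload shapes (`to_provider_a`, `to_provider_b`), and the prefix-based routing in `ADAPTERS` are all hypothetical. Client code builds one provider-neutral request; only the adapter layer knows each backend's schema.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

@dataclass
class UnifiedRequest:
    """Provider-neutral request (hypothetical field names)."""
    model: str
    messages: List[Dict[str, str]]
    max_tokens: int = 256
    temperature: float = 0.7

def to_provider_a(req: UnifiedRequest) -> Dict[str, Any]:
    # Hypothetical provider A accepts chat messages unchanged.
    return {
        "model": req.model,
        "messages": req.messages,
        "max_tokens": req.max_tokens,
        "temperature": req.temperature,
    }

def to_provider_b(req: UnifiedRequest) -> Dict[str, Any]:
    # Hypothetical provider B wants a separate system prompt, one flattened
    # prompt string, and a differently named token limit.
    system = "\n".join(m["content"] for m in req.messages if m["role"] == "system")
    prompt = "\n".join(
        f'{m["role"]}: {m["content"]}' for m in req.messages if m["role"] != "system"
    )
    return {
        "model_id": req.model,
        "system": system,
        "prompt": prompt,
        "max_output_tokens": req.max_tokens,
        "temperature": req.temperature,
    }

# Hypothetical routing rule: model-name prefix -> adapter.
ADAPTERS: Dict[str, Callable[[UnifiedRequest], Dict[str, Any]]] = {
    "a-": to_provider_a,
    "b-": to_provider_b,
}

def translate(req: UnifiedRequest) -> Dict[str, Any]:
    """Build the backend-specific payload for the requested model."""
    for prefix, adapter in ADAPTERS.items():
        if req.model.startswith(prefix):
            return adapter(req)
    raise ValueError(f"no adapter registered for model {req.model!r}")

# Usage: the caller's request is identical whichever backend serves it.
msgs = [{"role": "system", "content": "Be brief."}, {"role": "user", "content": "Hi"}]
payload = translate(UnifiedRequest("b-chat", msgs))
# payload["prompt"] == "user: Hi"; payload["max_output_tokens"] == 256
```

Adding a new model then means registering one more adapter; callers keep sending the same `UnifiedRequest`.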

Technology Stack

Unified API Platform & No-Code Tools

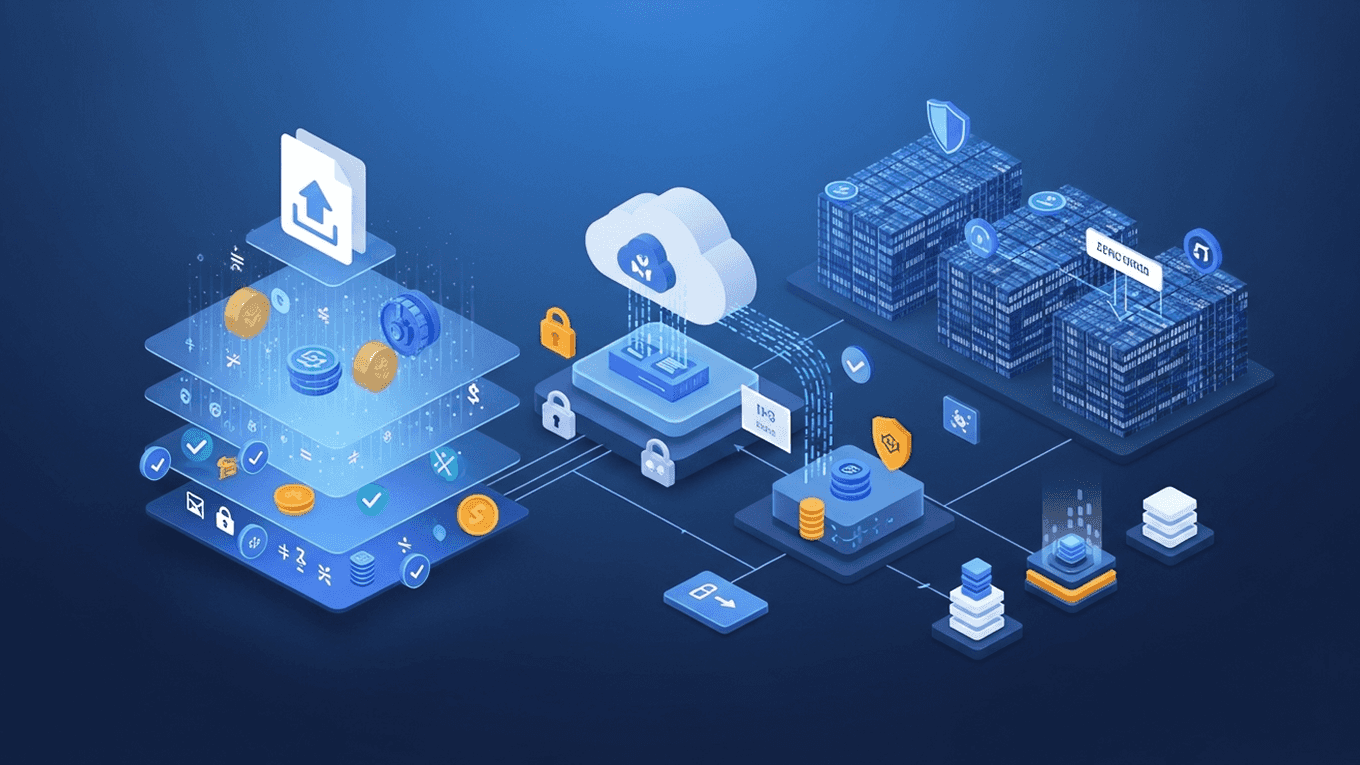

Edstem delivered a unified, sovereign API platform with complementary tools:

### Unified API Layer
- Single endpoint for multiple LLMs and for vision, speech, reasoning, and other AI models
- Consistent request-response format across models
- No refactoring, no re-engineering, and no vendor lock-in when switching models
- High-throughput, fault-tolerant, horizontally scalable design with multi-region load balancing
- Health monitoring for LLM backends

### Supported AI Capabilities
- Reasoning: multi-step problem solving, planning, tool usage, logical inference
- Response API: structured outputs, function calling, controlled JSON responses
- Chat Completion: conversational agents, copilots, support automation
- Embeddings: semantic search, vector retrieval, recommendation systems
- Text-to-Speech, Speech Recognition, Audio Generation
- Translation & Transcription
- Image Generation
- Reranker: relevance scoring and ranking optimization

### No-Code Frontend Portal
- Interact with any LLM directly from the browser
- Perform chat, reasoning, speech, translation, image, embedding, and audio tasks
- Compare outputs side by side
- Access usage history and export results

### Multi-Modal Playground
- Live testing of all supported models
- Adjustable parameters (temperature, top-p, max tokens, etc.)
- Model-to-model comparison views
- Input/output history and sharing
- Export settings as cURL, Python, or JavaScript API snippets

### Sovereign, High-Performance Architecture
- Full data sovereignty and regional isolation
- Encrypted, compliance-grade pipelines
- Horizontal auto-scaling and multi-region failover
- Intelligent fallback mechanisms
- Advanced observability dashboards

### Industry-Standard Protocols
- REST, gRPC, WebSockets, Server-Sent Events (SSE)
- Async job-based processing
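Streaming responses over Server-Sent Events arrive as `field: value` lines, with a blank line ending each event. The sketch below is a simplified client-side parser assuming standard SSE framing; it handles only the `event` and `data` fields and comment lines. The `[DONE]` sentinel in the example is a convention of some LLM APIs, assumed here rather than documented for this platform.

```python
from typing import Iterable, Iterator, List, Tuple

def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event_type, data) pairs from a Server-Sent Events stream.

    Simplified: handles "event" and "data" fields and comment lines;
    "id" and "retry" fields are ignored.
    """
    event_type = "message"  # default event type in SSE
    data_lines: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the buffered event if it carried data.
            if data_lines:
                yield event_type, "\n".join(data_lines)
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment or keep-alive line
        name, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # drop a single leading space after the colon
        if name == "event":
            event_type = value
        elif name == "data":
            data_lines.append(value)

# Usage with an in-memory stream; "[DONE]" is an assumed end-of-stream sentinel.
stream = [": keep-alive\n", "data: Hel\n", "\n", "data: lo\n", "\n",
          "event: done\n", "data: [DONE]\n", "\n"]
for event, data in parse_sse(stream):
    if data == "[DONE]":
        break
    print(data, end="")
# prints "Hello"
```

An event without a terminating blank line is not dispatched, which matches how SSE treats an incomplete trailing event.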

Unified API Layer

Single-endpoint access to all AI models, with a consistent request-response format and high throughput
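From the client's side, a single endpoint means that switching models changes a field in the request body, not the URL, SDK, or authentication scheme. A minimal sketch using Python's standard library, assuming a hypothetical gateway URL (`GATEWAY_URL`) and hypothetical body fields (`model`, `task`, `input`); it only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical gateway URL; the platform's real endpoint and schema are not given here.
GATEWAY_URL = "https://api.example.com/v1/invoke"

def build_request(model: str, task: str, payload: dict, api_key: str) -> urllib.request.Request:
    """Build one POST request; only `model` and `task` vary between models."""
    body = json.dumps({"model": model, "task": task, "input": payload}).encode("utf-8")
    return urllib.request.Request(
        GATEWAY_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# Two different models, same endpoint and same request shape.
chat_req = build_request("model-x", "chat", {"text": "Hello"}, "KEY")
embed_req = build_request("model-y", "embeddings", {"text": "Hello"}, "KEY")
```

Passing either request to `urllib.request.urlopen` would send it to a real gateway.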

No-Code Portal

Web-based portal allowing non-technical users to interact with AI models, compare outputs, and export results

Multi-Modal Playground

Interactive platform for experimentation and model evaluation with adjustable parameters and sharing capabilities

- Rapid Prototyping

- Model Evaluation
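The playground's export feature turns the current parameter settings into a runnable snippet. A sketch of the cURL case, assuming a hypothetical chat-style JSON body and an `$API_KEY` environment variable; `to_curl` is illustrative, not the platform's exporter. `shlex.quote` keeps prompts that contain quotes safe to paste into a POSIX shell.

```python
import json
import shlex

def to_curl(endpoint: str, model: str, prompt: str, temperature: float = 0.7,
            top_p: float = 1.0, max_tokens: int = 256) -> str:
    """Render playground settings as a cURL command (hypothetical request schema)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
    }
    # Each option on its own continued line, as a human would format it.
    return " \\\n  ".join([
        f"curl -X POST {shlex.quote(endpoint)}",
        "-H 'Content-Type: application/json'",
        '-H "Authorization: Bearer $API_KEY"',
        f"-d {shlex.quote(json.dumps(body))}",
    ])

print(to_curl("https://api.example.com/v1/chat", "model-x", "It's fine", temperature=0.2))
```

Python and JavaScript exports would follow the same pattern: serialize the same parameter dictionary into a template for the target language.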

Unified API

Single endpoint for multiple AI models

No-Code Portal

Empowers non-technical users

Multi-Modal Playground

Experiment and compare models easily

Sovereign Architecture

Enterprise-grade data security and reliability
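The health monitoring and intelligent fallback listed for the platform can be illustrated with a priority-ordered retry loop. In this sketch, `Backend`, `AllBackendsFailed`, and `complete_with_fallback` are hypothetical names. A real deployment would refresh the `healthy` flag from periodic health checks and add timeouts and retry budgets instead of marking a backend down after a single failure.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Backend:
    name: str
    call: Callable[[str], str]  # hypothetical: prompt -> completion
    healthy: bool = True        # assumed to be maintained by a health checker

class AllBackendsFailed(Exception):
    pass

def complete_with_fallback(backends: List[Backend], prompt: str) -> Tuple[str, str]:
    """Try healthy backends in priority order; mark a backend unhealthy on error."""
    errors = []
    for backend in backends:
        if not backend.healthy:
            continue  # skip backends already flagged as down
        try:
            return backend.name, backend.call(prompt)
        except Exception as exc:  # sketch only: real code would catch narrower errors
            backend.healthy = False
            errors.append(f"{backend.name}: {exc}")
    raise AllBackendsFailed("; ".join(errors) or "no healthy backends")
```

Ordering the list by preference (for example, in-region backends first) keeps requests on the primary model whenever it is healthy.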

Results & Business Impact

1. Faster Customer Adoption: Unified APIs and no-code tools reduced integration time for enterprise users.
2. Empowered Technical & Non-Technical Users: The frontend portal and playground boosted adoption across engineering, product, and business teams.
3. Reduced Engineering Overhead: Developers no longer maintain multiple connectors or refactor code.
4. Faster Development & Deployment: Teams integrate once and unlock access to multiple models.
5. Reduced Operational Complexity: A consistent API layer removes the need for multiple connectors and vendor-specific code.
6. Cost Optimization & Flexibility: Teams switch between models based on performance, cost, or availability without code changes.
7. Enterprise-Grade Data Sovereignty: Sensitive data stays protected through isolation, encryption, and compliance controls.
8. Reliability at Scale: A high-throughput, fault-tolerant architecture keeps mission-critical applications running smoothly.
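Switching models "based on performance, cost, or availability without code changes" implies that the model is chosen by a policy at request time rather than hard-coded. A minimal sketch of one such policy, the cheapest available model within a latency budget, with hypothetical model names, prices, and latencies:

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # hypothetical pricing, USD
    p95_latency_ms: int        # hypothetical observed latency
    available: bool = True

def choose_model(options: List[ModelOption], max_latency_ms: int) -> Optional[ModelOption]:
    """Return the cheapest available model that meets the latency budget, else None."""
    eligible = [o for o in options if o.available and o.p95_latency_ms <= max_latency_ms]
    return min(eligible, key=lambda o: o.cost_per_1k_tokens, default=None)

# Hypothetical option table; in practice this would live in configuration.
OPTIONS = [
    ModelOption("large", 0.03, 900),
    ModelOption("medium", 0.01, 400),
    ModelOption("small", 0.002, 150, available=False),
]
```

Because callers state only the latency budget, updating the option table (for example, when a cheaper model comes online) changes the routing without touching application code.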

Before

- Multiple AI models with different APIs, schemas, and authentication

- Code refactoring required when switching models

- Limited access for non-technical users

- No data sovereignty or compliance guarantees

- High operational complexity and unreliability

After

- Unified API layer for all AI models

- No-code frontend portal for non-technical users

- Multi-modal playground for experimentation

- Sovereign, high-performance architecture with compliance-grade pipelines
